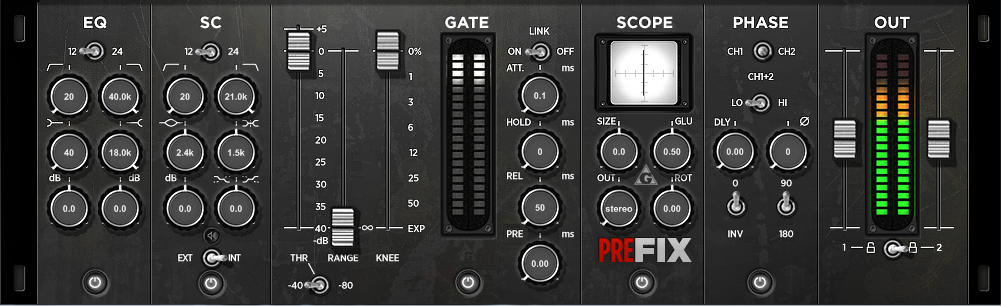

The 1.2 update adds VST3 support and fixes a bug in the phase section where the 90° and 180° settings were swapped. The update also comes with online documentation.

The update is available as freeware for Windows in VST and VST3 formats. Download your copy here.
